Another Deep Dive on the React VDOM: React Fiber Architecture

React Fiber architecture was introduced with the release of React 16 in September 2017.

This article explores how React's Fiber tree, Scheduler, and time slicing work together with the browser's event loop to deliver smooth user experiences.

If you have not read my previous article on the virtual DOM, you can find it here: React Virtual DOM Deep Dive - Part 1

The Problem: Why React Needed Fiber

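Before Fiber, React's reconciler (often called the "stack reconciler") was synchronous and uninterruptible. It walked the component tree recursively on the JavaScript call stack, so once an update started it had to run to completion. When state changed, React would:

- Re-render the updated component and its entire subtree in one go

- Block the main thread until that work completed

- As a result, cause frame drops and janky interactions on large trees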

```javascript
// Pre-Fiber: synchronous rendering
function legacyRender() {
  // This could take 100ms+ and block everything
  updateEntireComponentTree();
  // The browser can't paint or handle user input during this time
}
```


The main thread would be blocked, causing:

- Dropped frames (below 60fps)

- Delayed user input responses

- Janky animations

Understanding the Browser Event Loop

Before diving into Fiber, let's understand how the browser's event loop works:

```javascript
// Simplified event loop (pseudocode)
while (true) {
  // 1. Execute one macrotask from the macrotask (task) queue
  if (macrotaskQueue.length > 0) {
    const macrotask = macrotaskQueue.shift();
    executeMacrotask(macrotask);
    // Examples: setTimeout, setInterval, MessageChannel messages,
    // user events (click, scroll), I/O callbacks
  }

  // 2. Execute ALL microtasks until the queue is empty,
  //    including microtasks queued by other microtasks
  while (microtaskQueue.length > 0) {
    const microtask = microtaskQueue.shift();
    executeMicrotask(microtask);
    // Examples: Promise.then(), queueMicrotask(),
    // MutationObserver callbacks
  }

  // 3. Render a frame (if needed and it is time for one)
  if (timeForNextFrame() && needsRendering()) {
    runAnimationFrameCallbacks(); // callbacks registered with requestAnimationFrame
    performStyleAndLayout();
    paint();
    composite();
  }

  // 4. Handle idle time
  if (hasIdleTime()) {
    executeIdleTasks(); // callbacks registered with requestIdleCallback
  }
}

// Task queue examples:
const macrotaskQueue = [
  { type: 'setTimeout', callback: () => console.log('timer') },
  { type: 'click', target: button, callback: handleClick },
  { type: 'message', callback: reactSchedulerWork }
];

const microtaskQueue = [
  { type: 'promise', callback: () => console.log('promise resolved') },
  { type: 'queueMicrotask', callback: () => console.log('microtask') }
];
```
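
To see the ordering rule from the pseudocode in action, here is a small, deterministic simulation. `ToyEventLoop` and its methods are hypothetical names invented for this sketch: it models only the "run one task, then drain every microtask" rule and marks where a browser could render. There are no real timers or promises involved, and the task names are just labels (real browsers keep several task sources and may choose among them, so cross-source ordering is not guaranteed).

```javascript
// A toy, synchronous model of the event loop's ordering rules.
// Hypothetical teaching code: no real timers, promises, or rendering.
class ToyEventLoop {
  constructor() {
    this.tasks = [];      // macrotask queue: timers, messages, input events
    this.microtasks = []; // microtask queue: promise reactions, queueMicrotask
    this.log = [];
  }

  queueTask(name, fn = () => {}) {
    this.tasks.push({ name, fn });
  }

  queueMicrotask(name, fn = () => {}) {
    this.microtasks.push({ name, fn });
  }

  run() {
    while (this.tasks.length > 0) {
      // 1. Run exactly one task
      const task = this.tasks.shift();
      this.log.push(task.name);
      task.fn(this);

      // 2. Drain ALL microtasks, including ones queued by other microtasks
      while (this.microtasks.length > 0) {
        const micro = this.microtasks.shift();
        this.log.push(micro.name);
        micro.fn(this);
      }

      // 3. This is where a browser may render a frame
      this.log.push("(render opportunity)");
    }
    return this.log;
  }
}

const loop = new ToyEventLoop();
loop.queueTask("click handler", (l) => {
  l.queueMicrotask("promise.then", (l2) => l2.queueMicrotask("nested microtask"));
  l.queueTask("setTimeout callback");
});
loop.queueTask("MessageChannel message");

console.log(loop.run());
// loop.log: ["click handler", "promise.then", "nested microtask", "(render opportunity)",
//            "MessageChannel message", "(render opportunity)",
//            "setTimeout callback", "(render opportunity)"]
```

The nested microtask still runs before the next task gets a turn, so a long chain of microtasks can delay rendering just as much as one long task.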


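At 60fps, the browser has 1000 / 60 ≈ 16.67ms per frame, and that budget is shared with style calculation, layout, and paint. If a single JavaScript task runs longer than that, the browser cannot render in between, and frames get dropped.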
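
The frame-budget arithmetic is easy to check in code. The helper names below are made up for this sketch, and the missed-frame count is only a rough estimate that assumes the blocking task starts exactly on a frame boundary.

```javascript
// Frame-budget arithmetic (hypothetical helper names, illustrative only).
function frameBudgetMs(fps) {
  return 1000 / fps;
}

// Roughly how many frame deadlines pass while the main thread is blocked,
// assuming the block starts exactly at a frame boundary.
// Integer math (ms * fps / 1000) avoids floating-point rounding surprises.
function framesMissed(blockedMs, fps = 60) {
  return Math.floor((blockedMs * fps) / 1000);
}

console.log(frameBudgetMs(60).toFixed(2)); // 16.67
console.log(framesMissed(100));            // 6: a 100ms render spans about 6 frames at 60fps
console.log(framesMissed(100, 120));       // 12: the same render on a 120Hz display
```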

The Fiber Architecture: React's Solution

Fiber transforms React into a cooperative multitasking system. Instead of blocking the main thread, React:

- Breaks work into small units (fibers)

- Yields control back to the browser periodically

- Resumes work when the browser is ready

Fiber Node Structure

Each element in your React tree becomes a fiber node:

```javascript
// Simplified fiber node structure (a subset of React's real fields).
// Update, Placement and domElement stand in for React's internal
// flag constants and a real DOM node.
const fiberNode = {
  // Component information
  type: 'div', // or function/class component
  key: null,

  // Tree structure
  child: null,   // First child fiber
  sibling: null, // Next sibling fiber
  return: null,  // Parent fiber

  // Work tracking
  lanes: 0b0010000,          // Which update lanes this fiber has work in
  childLanes: 0b0010000,     // Which lanes have work in its subtree
  flags: Update | Placement, // What work needs to be done at commit

  // State and props
  pendingProps: { className: 'container' }, // New props to process
  memoizedProps: null, // Props used in the last completed render
  memoizedState: null, // Current state (for function components, the hooks list)
  updateQueue: null,   // Pending updates

  // References
  stateNode: domElement, // DOM node or component instance
  alternate: null,       // Current ↔ work-in-progress fiber
};
```
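
Lanes and flags are bitmasks, so most of the bookkeeping reduces to bitwise operations. The helpers below mirror small helpers in React's lane code (`mergeLanes`, `includesSomeLane`, `getHighestPriorityLane`), but this is a standalone sketch: the constants are illustrative values that match the bit positions used in the fiber example above, and `bubbleChildLanes` is a hypothetical name for the merge React performs while completing each fiber.

```javascript
// Illustrative lane constants (assumed values; a lower bit means higher priority).
const NoLanes = 0b0000000;
const SyncLane = 0b0000001;
const DefaultLane = 0b0010000;

const mergeLanes = (a, b) => a | b;
const includesSomeLane = (a, b) => (a & b) !== NoLanes;
// Isolate the lowest set bit, i.e. the most urgent pending lane
const getHighestPriorityLane = (lanes) => lanes & -lanes;

// A parent's childLanes = union of each child's own lanes and childLanes
function bubbleChildLanes(children) {
  return children.reduce(
    (acc, child) => mergeLanes(acc, mergeLanes(child.lanes, child.childLanes)),
    NoLanes
  );
}

const header = { lanes: SyncLane, childLanes: NoLanes };  // has a pending click update
const list = { lanes: NoLanes, childLanes: DefaultLane }; // default-priority work deeper down
const parentChildLanes = bubbleChildLanes([header, list]);

console.log(parentChildLanes.toString(2));                          // 10001
console.log(getHighestPriorityLane(parentChildLanes) === SyncLane); // true
// During a SyncLane render, neither `list` nor its subtree has SyncLane work,
// so the whole subtree can be skipped (a "bailout")
console.log(includesSomeLane(SyncLane, mergeLanes(list.lanes, list.childLanes))); // false
```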


Why Are Both alternate and stateNode References Needed?

(1) alternate - Links the two versions of the same fiber in React's two trees (current vs work-in-progress)

- Used for comparing old vs new state/props

- Enables React's diffing algorithm and lets React reuse fiber objects between renders instead of allocating new ones

- Purely internal to React's reconciliation

(2) stateNode - For host components such as div, a direct reference to the actual DOM element (for class components, it holds the component instance)

- Shared between both fiber trees (the same DOM node)

- Used for the actual DOM mutations during the commit phase

- The bridge between React's virtual representation and the real DOM

Double Buffering: Current vs Work-in-Progress

React maintains two fiber trees:

```javascript
// Current tree (what's on screen)
const currentTree = {
  // Represents the currently rendered UI
};

// Work-in-progress tree (being built)
const workInProgressTree = {
  // New version being constructed
  alternate: currentTree, // Points back to current
};

currentTree.alternate = workInProgressTree;
```
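
React never throws the old tree away. When a render commits, the root's `current` pointer moves to the finished work-in-progress tree, and on the next update the previous tree is recycled through `alternate` as the new work-in-progress buffer. The sketch below models that cycle for a single fiber: `createFiber` and `renderAndCommit` are hypothetical helpers, and `createWorkInProgress` is a heavily simplified stand-in for React's function of the same name that keeps only a handful of fields.

```javascript
// Simplified double buffering (hypothetical helpers, loosely modeled on
// React's createWorkInProgress; real fibers carry many more fields).
function createFiber(type, pendingProps) {
  return { type, pendingProps, memoizedProps: null, stateNode: null, alternate: null };
}

function createWorkInProgress(current, pendingProps) {
  let wip = current.alternate;
  if (wip === null) {
    // First update: allocate the second buffer and link the pair
    wip = createFiber(current.type, pendingProps);
    wip.stateNode = current.stateNode; // both versions share one DOM node
    wip.alternate = current;
    current.alternate = wip;
  } else {
    // Later updates: recycle the old buffer instead of allocating
    wip.pendingProps = pendingProps;
  }
  wip.memoizedProps = current.memoizedProps;
  return wip;
}

function renderAndCommit(root, pendingProps) {
  const wip = createWorkInProgress(root.current, pendingProps);
  wip.memoizedProps = wip.pendingProps; // "render": props are now the latest ones
  root.current = wip;                   // "commit": the finished tree becomes current
  return wip;
}

const domNode = { tagName: "DIV" }; // stand-in for a real DOM element
const initial = createFiber("div", { className: "a" });
initial.stateNode = domNode;
initial.memoizedProps = initial.pendingProps;
const root = { current: initial };

const second = renderAndCommit(root, { className: "b" });
const third = renderAndCommit(root, { className: "c" });

console.log(third === initial);                    // true: the old buffer was reused
console.log(second.stateNode === domNode);         // true: same DOM node in both trees
console.log(root.current.memoizedProps.className); // c
```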


The React Scheduler: Priority-Based Task Management

The Scheduler is a separate package that manages when React gets CPU time:

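```javascript
// The scheduler package exports its API with an `unstable_` prefix
import {
  unstable_scheduleCallback as scheduleCallback,
  unstable_shouldYield as shouldYield,
} from 'scheduler';

// Priority levels (same values as the package's unstable_*Priority exports)
const ImmediatePriority = 1;    // must run synchronously
const UserBlockingPriority = 2; // user interactions
const NormalPriority = 3;       // default updates
const LowPriority = 4;          // deferrable work
const IdlePriority = 5;         // idle work, e.g. offscreen content
```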


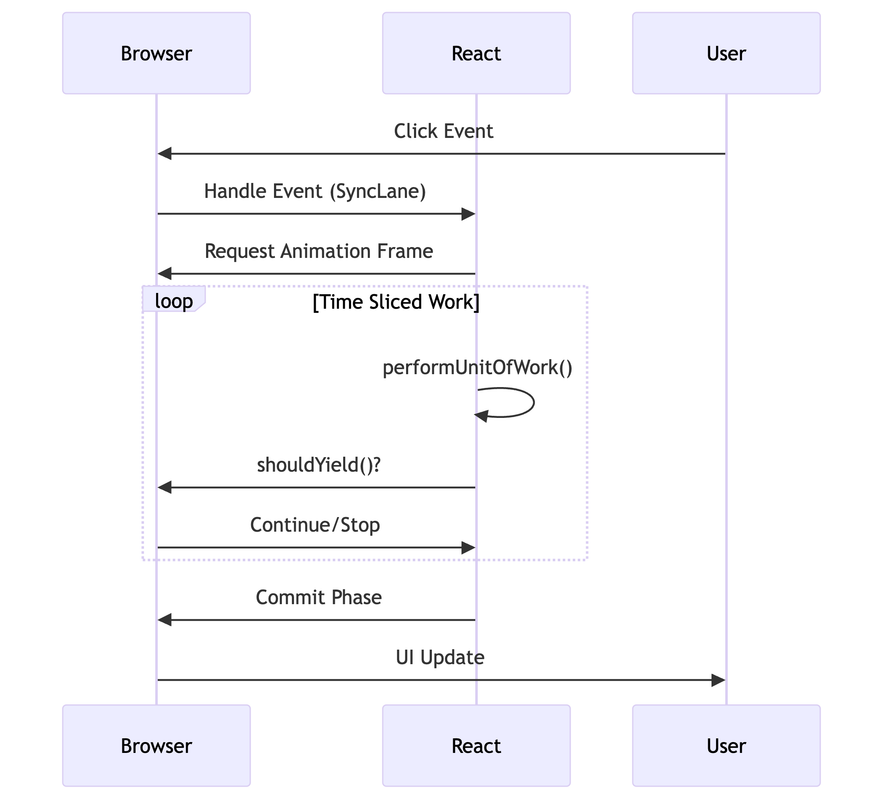

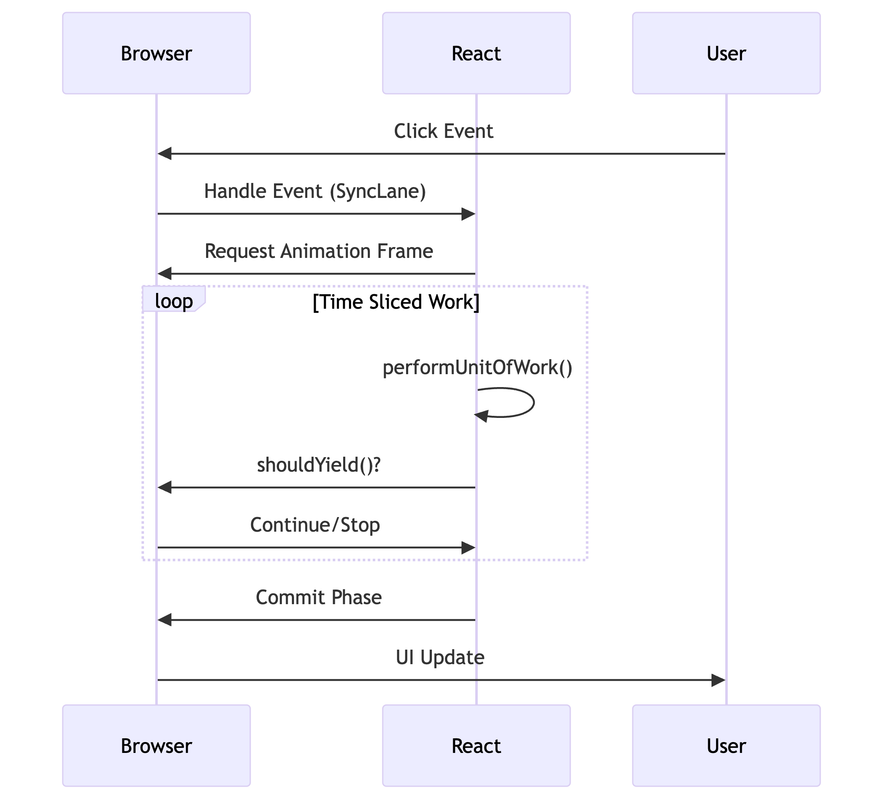

How the Scheduler Works with the Event Loop

The Scheduler doesn't just manage priorities; it also coordinates with the browser's event loop so that React doesn't block the main thread. Here's a simplified version of how it integrates:

```javascript
// Scheduler uses a MessageChannel to schedule its work loop (simplified)
const channel = new MessageChannel();
const port1 = channel.port1;
const port2 = channel.port2;

// Runs as a macrotask, after all current synchronous code and microtasks
// complete (React names this handler performWorkUntilDeadline)
port1.onmessage = () => {
  if (scheduledHostCallback === null) {
    isMessageLoopRunning = false;
    return;
  }
  startTime = getCurrentTime(); // start of a new ~5ms slice (read by shouldYield)
  const hasMoreWork = scheduledHostCallback();
  if (hasMoreWork) {
    port2.postMessage(null); // yield to the browser, continue in another macrotask
  } else {
    isMessageLoopRunning = false;
    scheduledHostCallback = null;
  }
};

function scheduleCallback(priorityLevel, callback, options) {
  const currentTime = getCurrentTime();

  // A task can be delayed with options.delay; otherwise it starts now
  const delay = options && typeof options.delay === 'number' ? options.delay : 0;
  const startTime = delay > 0 ? currentTime + delay : currentTime;

  // Calculate when this task should expire based on priority
  let timeout;
  switch (priorityLevel) {
    case ImmediatePriority:
      timeout = -1; // Already expired: run ASAP
      break;
    case UserBlockingPriority:
      timeout = 250; // 250ms before considered expired
      break;
    case LowPriority:
      timeout = 10000; // 10 seconds
      break;
    case IdlePriority:
      timeout = maxSigned31BitInt; // Never expires in practice (1073741823)
      break;
    case NormalPriority:
    default:
      timeout = 5000; // 5 seconds
      break;
  }

  const expirationTime = startTime + timeout;

  const newTask = {
    id: taskIdCounter++,
    callback,
    priorityLevel,
    startTime,
    expirationTime,
    sortIndex: -1,
  };

  // Add to the appropriate min-heap based on timing
  if (startTime > currentTime) {
    // Delayed task: add to the timer queue, sorted by start time
    newTask.sortIndex = startTime;
    push(timerQueue, newTask);
    // If nothing is ready and this is the earliest delayed task,
    // set a timer to move it to the task queue when it becomes ready
    if (peek(taskQueue) === null && newTask === peek(timerQueue)) {
      cancelHostTimeout();
      requestHostTimeout(handleTimeout, startTime - currentTime);
    }
  } else {
    // Ready task: add to the task queue, sorted by expiration time
    newTask.sortIndex = expirationTime;
    push(taskQueue, newTask);
    // Schedule work if not already scheduled
    if (!isHostCallbackScheduled && !isPerformingWork) {
      isHostCallbackScheduled = true;
      requestHostCallback(flushWork);
    }
  }

  return newTask;
}

function requestHostCallback(callback) {
  scheduledHostCallback = callback;
  if (!isMessageLoopRunning) {
    isMessageLoopRunning = true;
    // Posting a message queues a macrotask that runs port1.onmessage
    port2.postMessage(null);
  }
}

// Why MessageChannel instead of setTimeout?
// 1. Once setTimeout calls are nested several levels deep, browsers clamp
//    the delay to at least 4ms, and the Scheduler reschedules itself constantly
// 2. A posted message is queued as a task with no such clamp, so it runs
//    on an upcoming event-loop iteration as soon as the browser gets to it
// 3. More predictable timing for React's scheduling
// (The real Scheduler prefers setImmediate where it exists, e.g. Node.js,
//  and falls back to setTimeout where MessageChannel is unavailable.)
```
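
The `push` and `peek` calls above operate on binary min-heaps: the real Scheduler keeps its task and timer queues in a min-heap ordered by `sortIndex`, breaking ties by task `id` (insertion order). Below is a standalone sketch of that idea. The timeout values come from the switch statement above, while `enqueue`, `TIMEOUT` and the task names are hypothetical.

```javascript
// Minimal binary min-heap in the style of the Scheduler's queues (a sketch).
// Order: smaller sortIndex first; ties broken by id (insertion order).
function compare(a, b) {
  const diff = a.sortIndex - b.sortIndex;
  return diff !== 0 ? diff : a.id - b.id;
}

function push(heap, node) {
  heap.push(node);
  let i = heap.length - 1;
  while (i > 0) {
    const parent = (i - 1) >>> 1;
    if (compare(heap[parent], heap[i]) <= 0) break;
    [heap[parent], heap[i]] = [heap[i], heap[parent]]; // sift up
    i = parent;
  }
}

function peek(heap) {
  return heap.length === 0 ? null : heap[0];
}

function pop(heap) {
  if (heap.length === 0) return null;
  const first = heap[0];
  const last = heap.pop();
  if (heap.length > 0) {
    heap[0] = last;
    let i = 0;
    for (;;) {
      const left = 2 * i + 1;
      const right = left + 1;
      let smallest = i;
      if (left < heap.length && compare(heap[left], heap[smallest]) < 0) smallest = left;
      if (right < heap.length && compare(heap[right], heap[smallest]) < 0) smallest = right;
      if (smallest === i) break;
      [heap[smallest], heap[i]] = [heap[i], heap[smallest]]; // sift down
      i = smallest;
    }
  }
  return first;
}

// Timeouts from the scheduleCallback switch above (1073741823 = maxSigned31BitInt)
const TIMEOUT = { UserBlocking: 250, Normal: 5000, Idle: 1073741823 };
const taskQueue = [];
let taskIdCounter = 1;

function enqueue(name, priority, now) {
  const expirationTime = now + TIMEOUT[priority];
  push(taskQueue, { id: taskIdCounter++, name, sortIndex: expirationTime });
}

enqueue("prefetch offscreen", "Idle", 0);
enqueue("render list", "Normal", 0);
enqueue("click feedback", "UserBlocking", 100); // scheduled later, still runs first

const order = [];
while (peek(taskQueue) !== null) order.push(pop(taskQueue).name);
console.log(order); // order: ["click feedback", "render list", "prefetch offscreen"]
```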


Time Slicing: The Heart of Concurrent React

Time slicing allows React to pause and resume work:

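```javascript
function workLoopConcurrent() {
  // Keep working while there is time left in the current slice
  while (workInProgress !== null && !shouldYield()) {
    performUnitOfWork(workInProgress);
  }
  // If shouldYield() returned true, we pause here!
  // workInProgress still points at the next fiber to process,
  // so rendering can resume from exactly this spot later
}

const frameInterval = 5; // default time slice length in ms
let startTime = -1;      // set when each slice begins

function shouldYield() {
  // Have we used up our time slice (~5ms)?
  return getCurrentTime() - startTime >= frameInterval;
}
```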
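
Here is a self-contained sketch of the same pause-and-resume idea, driven by a fake clock so the result is deterministic. `FRAME_INTERVAL_MS` mirrors the ~5ms slice above; `createTimeSlicedRunner`, `createFakeClock` and the per-unit `cost` values are hypothetical.

```javascript
// Time-slicing sketch with a fake clock (hypothetical names throughout).
const FRAME_INTERVAL_MS = 5; // assumed slice length, mirroring the ~5ms above

function createFakeClock() {
  let t = 0;
  return { now: () => t, advance: (ms) => { t += ms; } };
}

function createTimeSlicedRunner(units, clock) {
  let index = 0; // plays the role of workInProgress: where to resume

  return function runSlice() {
    const sliceStart = clock.now();
    const shouldYield = () => clock.now() - sliceStart >= FRAME_INTERVAL_MS;
    const done = [];
    while (index < units.length && !shouldYield()) {
      const unit = units[index++];
      clock.advance(unit.cost); // pretend the unit took `cost` ms
      done.push(unit.name);
    }
    return { done, hasMore: index < units.length };
  };
}

const clock = createFakeClock();
const units = ["A", "B", "C", "D", "E"].map((name) => ({ name, cost: 2 }));
const runSlice = createTimeSlicedRunner(units, clock);

const slices = [];
let result;
do {
  result = runSlice();
  slices.push(result.done);
  // Between slices the browser gets a chance to paint or handle input
} while (result.hasMore);

console.log(slices); // slices: [["A", "B", "C"], ["D", "E"]]
```

Unit C starts at 4ms and finishes at 6ms: the deadline is only checked between units, so a single slow unit (in React, a slow component) can still overrun its slice.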


The Complete Render Process

Let's trace what happens when the Increment button is clicked, including where time slicing applies and where it doesn't:

```jsx
import { useState } from 'react';

function App() {
  const [count, setCount] = useState(0);
  const [items, setItems] = useState([]);

  return (
    <div>
      <h1>Count: {count}</h1>
      <button onClick={() => setCount(count + 1)}>
        Increment
      </button>
      <ExpensiveList items={items} />
    </div>
  );
}
```


Step 1: Update Scheduling

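```javascript
// User clicks the button
function handleClick() {
  setCount(count + 1); // Creates an update object
}

// The update object React creates (simplified)
const update = {
  lane: SyncLane,    // A discrete event like a click gets the highest-priority lane
  action: count + 1, // The value passed to setCount
  next: null,        // Will point to the next update in a circular linked list
};

// Append it to the useState hook's queue (the first hook is fiber.memoizedState).
// queue.pending always points to the LAST update; last.next is the FIRST.
const queue = componentFiber.memoizedState.queue;
const pending = queue.pending;
if (pending === null) {
  update.next = update; // Only update: it points to itself
} else {
  update.next = pending.next; // New last → old first
  pending.next = update;      // Old last → new last
}
queue.pending = update;
```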


Each useState hook keeps its pending updates in a circular linked list on the hook's queue, and that list is processed when the component re-renders during the render phase.

The circular structure elegantly solves the "queue management" problem:

- A linear linked list needs separate head and tail pointers to append in O(1) while still reading from the front

- Arrays have resizing overhead, and removing items from the front costs O(n)

- A circular list needs only one pointer: queue.pending is the newest update (the tail) and queue.pending.next is the oldest (the head), so appending and finding the start are both O(1)

This design choice reflects React's focus on performance at the micro level: even something as simple as how updates are stored adds up when a complex application enqueues many state updates.
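
Here is a runnable sketch of that queue. `enqueueUpdate` keeps `queue.pending` pointing at the newest update, whose `next` wraps around to the oldest, and `processQueue` walks the ring once starting from `pending.next`. Both function names are hypothetical, and real React also tracks lanes, skipped updates and a base queue, which this sketch leaves out.

```javascript
// Circular update queue sketch (hypothetical names; lanes, skipped
// updates and rebasing from real React are omitted).
function createQueue() {
  return { pending: null }; // points at the LAST update; last.next is the FIRST
}

function enqueueUpdate(queue, action) {
  const update = { action, next: null };
  const last = queue.pending;
  if (last === null) {
    update.next = update; // a ring of one
  } else {
    update.next = last.next; // new update → oldest update
    last.next = update;      // previous newest → new update
  }
  queue.pending = update; // O(1) append using a single pointer
}

// Apply every pending update in insertion order, as useState does on re-render
function processQueue(baseState, queue) {
  const last = queue.pending;
  if (last === null) return baseState;
  queue.pending = null; // detach the ring before processing
  const first = last.next;
  let state = baseState;
  let update = first;
  do {
    state = typeof update.action === "function" ? update.action(state) : update.action;
    update = update.next;
  } while (update !== first);
  return state;
}

const queue = createQueue();
enqueueUpdate(queue, (c) => c + 1);
enqueueUpdate(queue, (c) => c + 1);
enqueueUpdate(queue, (c) => c * 10);
console.log(processQueue(0, queue)); // 20
```

Because plain values simply replace the state, calling `setCount(count + 1)` twice in one handler enqueues the same value twice and yields 1, not 2; updater functions like the ones above are applied in order against the latest state.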

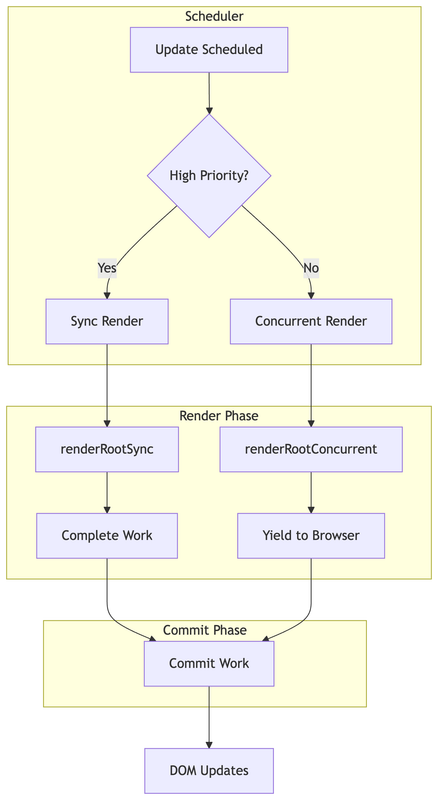

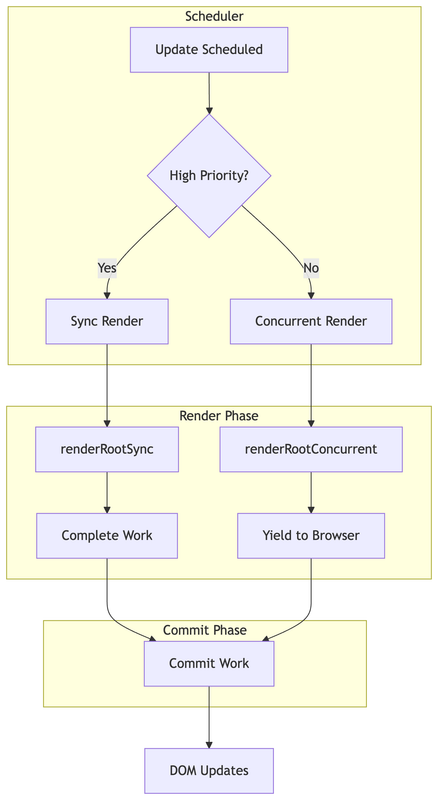

Step 2: Root Scheduling Decision

```javascript
// Simplified
function ensureRootIsScheduled(root) {
  const nextLanes = getNextLanes(root); // e.g. 0b0000001 (SyncLane)

  if (includesSyncLane(nextLanes)) {
    // Highest priority: bypass the Scheduler's time slicing entirely.
    // (React 18 flushes this sync queue in a microtask.) The click from
    // Step 1 takes this path, so it never reaches the time-sliced loop.
    scheduleSyncCallback(performSyncWorkOnRoot.bind(null, root));
    return;
  }

  // Lower-priority updates are handed to the Scheduler
  const schedulerPriority = lanesToSchedulerPriority(nextLanes);
  root.callbackNode = scheduleCallback(
    schedulerPriority,
    performConcurrentWorkOnRoot.bind(null, root)
  );
}
```


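Step 3: Work Loop Execution

For updates handed to the Scheduler (the lower-priority branch of Step 2), each Scheduler task runs performConcurrentWorkOnRoot: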

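```javascript
// Simplified
function performConcurrentWorkOnRoot(root) {
  const lanes = getNextLanes(root);

  // Should we time slice this work? Blocking lanes (and, in React 18,
  // lanes that have waited past their expiration) render without yielding.
  const shouldTimeSlice = !includesBlockingLane(lanes);

  if (shouldTimeSlice) {
    renderRootConcurrent(root, lanes); // interruptible rendering
  } else {
    renderRootSync(root, lanes); // non-interruptible rendering
  }

  if (workInProgress !== null) {
    // Yielded mid-render: return a continuation so the Scheduler
    // calls us again in a later time slice
    return performConcurrentWorkOnRoot.bind(null, root);
  }
  commitRoot(root); // render finished: commit the work-in-progress tree
  return null;
}

function renderRootConcurrent(root, lanes) {
  // Only start over for new work; otherwise resume the
  // work-in-progress tree where the previous slice stopped
  if (workInProgressRoot !== root || workInProgressRootRenderLanes !== lanes) {
    prepareFreshStack(root, lanes);
  }

  do {
    try {
      workLoopConcurrent();
      break;
    } catch (thrownValue) {
      handleError(root, thrownValue);
    }
  } while (true);
}
```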
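
Pausing is only half the story; a paused render also has to be restarted. In React's Scheduler, when a task's callback returns a function, the Scheduler keeps the task queued and calls that returned function (the "continuation") in a later slice instead of removing the task. Below is a minimal synchronous model of that contract: `runTasks` and `makeRender` are hypothetical names, and the loop stands in for the MessageChannel-driven slices described earlier.

```javascript
// Minimal model of the Scheduler's continuation contract (hypothetical names).
function runTasks(taskQueue, maxSlices = 100) {
  const log = [];
  let slices = 0;
  while (taskQueue.length > 0 && slices < maxSlices) {
    slices++;
    const task = taskQueue[0]; // most urgent task is kept at the front
    const continuation = task.callback();
    if (typeof continuation === "function") {
      task.callback = continuation; // not finished: keep the task, resume later
      log.push(`${task.name}: yielded`);
    } else {
      taskQueue.shift(); // finished: remove it from the queue
      log.push(`${task.name}: done`);
    }
    // A real Scheduler would return to the event loop between slices
  }
  return log;
}

// A fake render that needs `slicesNeeded` slices to finish
function makeRender(name, slicesNeeded) {
  let remaining = slicesNeeded;
  function performWork() {
    remaining--;
    return remaining > 0 ? performWork : null; // return itself as the continuation
  }
  return { name, callback: performWork };
}

const log = runTasks([makeRender("root render", 3)]);
console.log(log); // log: ["root render: yielded", "root render: yielded", "root render: done"]
```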


Step 4: Fiber Tree Traversal

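```javascript
function workLoopConcurrent() {
  while (workInProgress !== null && !shouldYield()) {
    performUnitOfWork(workInProgress);
  }
}

function performUnitOfWork(unitOfWork) {
  const current = unitOfWork.alternate;

  // Render phase: render the component / diff its children,
  // returning the first child fiber (or null)
  const next = beginWork(current, unitOfWork, renderLanes);
  unitOfWork.memoizedProps = unitOfWork.pendingProps;

  if (next === null) {
    // No child: complete this fiber, then move on to its sibling,
    // or climb back up through `return` to complete the parents
    completeUnitOfWork(unitOfWork);
  } else {
    // Continue with the child
    workInProgress = next;
  }
}
```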
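
performUnitOfWork relies on completeUnitOfWork to move sideways and upward through the tree. The runnable sketch below fills in that part using only the child, sibling and return pointers, then walks the App tree from this walkthrough. beginWork and completeUnitOfWork here are simplified stand-ins that only log (the real beginWork also takes the current fiber and render lanes), and fiber() is a hypothetical helper for building the tree.

```javascript
// Begin/complete traversal order over child/sibling/return links (a sketch).
function fiber(name, children = []) {
  const node = { name, child: null, sibling: null, return: null };
  let prev = null;
  for (const c of children) {
    c.return = node;
    if (prev === null) node.child = c;
    else prev.sibling = c;
    prev = c;
  }
  return node;
}

const log = [];
let workInProgress = null;

function beginWork(unit) {
  log.push(`begin ${unit.name}`);
  return unit.child; // descend into the first child, if any
}

function completeUnitOfWork(unit) {
  let completed = unit;
  do {
    log.push(`complete ${completed.name}`);
    if (completed.sibling !== null) {
      workInProgress = completed.sibling; // next: begin the sibling
      return;
    }
    completed = completed.return; // no sibling: complete the parent too
  } while (completed !== null);
  workInProgress = null; // climbed past the root: the render is finished
}

function performUnitOfWork(unit) {
  const next = beginWork(unit);
  if (next === null) completeUnitOfWork(unit);
  else workInProgress = next;
}

// App > div > (h1, button, ExpensiveList)
const root = fiber("App", [fiber("div", [fiber("h1"), fiber("button"), fiber("ExpensiveList")])]);
workInProgress = root;
while (workInProgress !== null) performUnitOfWork(workInProgress);
console.log(log);
// log: ["begin App", "begin div", "begin h1", "complete h1", "begin button",
//       "complete button", "begin ExpensiveList", "complete ExpensiveList",
//       "complete div", "complete App"]
```

Because the only traversal state is the workInProgress variable, stopping the while loop early and restarting it later picks up at exactly the right fiber, which is what makes yielding in workLoopConcurrent safe.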


Conclusion

React's Fiber architecture represents a fundamental shift in how JavaScript frameworks handle rendering. By implementing cooperative multitasking, priority-based scheduling, and time slicing, React can keep user interfaces responsive even while it renders large updates.

The key insights are:

- Fiber breaks work into interruptible units - enabling smooth UIs

- Scheduler manages priority and timing - ensuring important updates happen first

- Time slicing keeps long renders from monopolizing the main thread - helping maintain 60fps performance

- Lane-based priorities optimize user experience - immediate feedback for interactions, background processing for heavy work

Understanding these concepts helps you write more performant React applications and debug performance issues more effectively. The future of web applications lies in this cooperative approach to rendering, where the framework works with the browser rather than against it.

Modern React isn't just a library anymore - it's a sophisticated rendering engine that rivals native platforms in its ability to deliver smooth, responsive user experiences. The Fiber architecture is the foundation that makes it all possible.